Introduction

As high-performance computing (HPC) workloads and computational fluid dynamics (CFD) computations grow in size, so does the need for compute resources. This post demonstrates the fundamentals of running FieldView on Amazon Web Services (AWS) cloud compute resources using the free t2.micro instance type offered by AWS. For demonstration purposes, we limit the discussion to the free tier of AWS compute instances. Note that the minimal RAM provided is enough to run FieldView servers in the cloud, but not enough to run the FieldView Client interactively via NICE DCV remote display (provided with AWS) or to read CFD data of substantial size. For those purposes, you’ll need instances that AWS does not offer for free.

The goal of this article is to help you attain a level of comfort working in the cloud, with emphasis on ensuring your experience is at least as good as on a local machine, installing the needed software quickly, and tailoring RAM, SSD, CPU and GPU resources to your needs. This post was inspired by and adapted from the post by Astrid Walle, “Postprocessing on AWS with Tecplot.”

Quick Links to Major Sections

- Before You Get Started

- Set up FlexNet Publisher License Manager for FieldView

- Configure the License Server

- Set Up AWS Parallel Cluster

- Create the Parallel Cluster

- Install FieldView 20 on the Cluster

Before You Get Started

Create an AWS Account

Before you can start, you need to create a user account on AWS. Your account will come with 12 months of free tier access, which you can also use for your postprocessing jobs. Also, you need to install and make yourself familiar with the AWS CLI on your local machine. Additionally, you might want to:

- Create an IAM user or role instead of working as your AWS root user. Once you have used this guide to set up your free AWS account (with minimal compute), you may want to create IAM roles and set up instances with resources appropriate for handling CFD cases of considerable size.

- Select the appropriate AWS region for your postprocessing. All instances for the setup described in this guide need to be launched in the same region. Choose your region based on criteria such as which one is closest to you (for faster response) or closest to your existing data center if you are connecting it to AWS. There might also be legal requirements governing which region to use (data governance, privacy laws, etc.).

Obtain FieldView Network License

This how-to guide requires a FieldView network license running on a Linux instance. We will install the License Manager, and run commands to produce the instance’s ID and send it to passwords@tecplot.com to obtain a key (a license file.)

Create SSH Key Pairs

For accessing your created AWS Virtual Machines later without having to enter credentials, you need to create an ssh key pair on your local machine. On Linux/Mac this works with ssh-keygen. On Windows you can use a client like PuTTY. More information can be found in AWS documentation.

Once you’ve created your public and private keys, following the steps in the AWS documentation, you’re ready to import the SSH key pair to AWS.

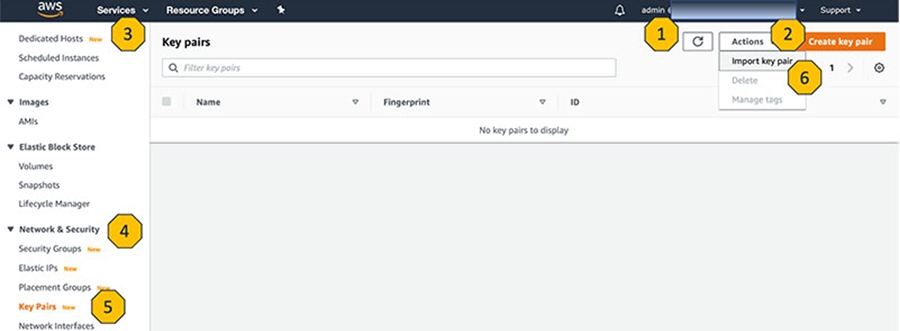

Now go to the AWS Console (Figure 1), check the settings for user account (1) and region (2), and select Services (3) -> EC2 -> Network & Security (4) -> Key Pairs (5) -> Import Key Pair (6).

Figure 1: AWS Console EC2 – Network & Security – Key pairs

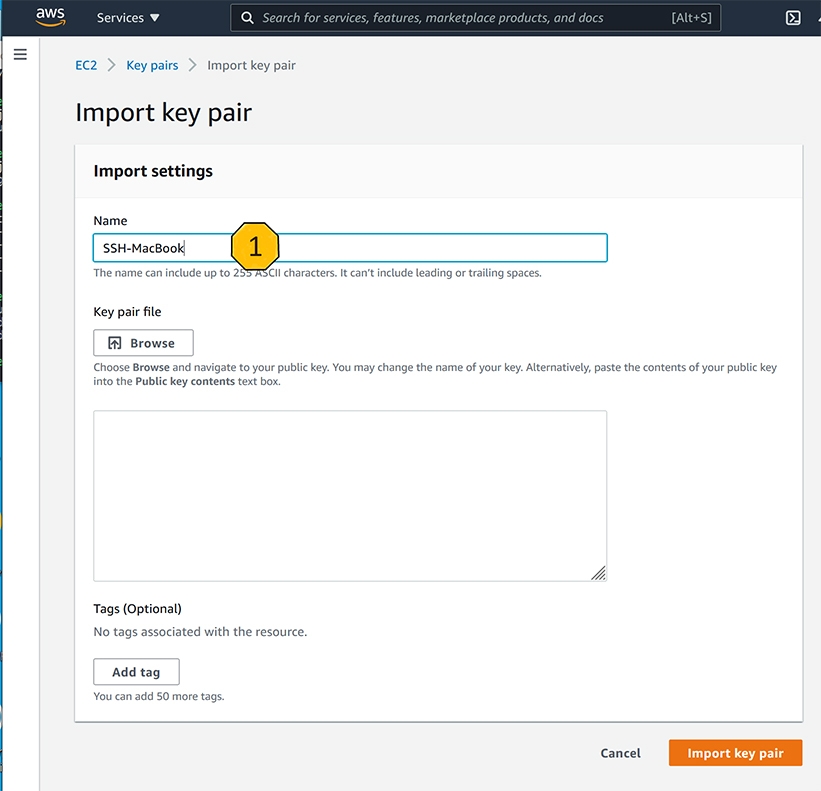

Figure 2: AWS console Import key pair

In the new dialog (Figure 2), give the key pair a unique, recognizable name (1), paste the content of the public key file that you created on your local machine (default path ~/.ssh/id_rsa.pub or C:\Users\<username>\.ssh\id_rsa.pub), and import it (2).

Note: There are several ways to generate and use key pairs, and the AWS Workshop would be a good source for information on this subject if you have any trouble.
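For readers who prefer the command line, the key creation and import steps above can be sketched as two small shell functions. This is only an illustrative sketch: the function names and the key name my-fieldview-key are hypothetical, the key is created without a passphrase, and the AWS CLI is assumed to be configured for the region you intend to use.

```shell
#!/bin/sh
# Sketch only: function names and the example key name are placeholders.

# Generate a 4096-bit RSA key pair at the given path, without a passphrase.
# ssh-keygen asks before overwriting an existing key file.
make_key_pair() {
    key_file=$1
    ssh-keygen -q -t rsa -b 4096 -N "" -f "$key_file"
}

# Import the public half of the key into the currently configured AWS region.
import_key_pair() {
    key_name=$1
    key_file=$2
    aws ec2 import-key-pair \
        --key-name "$key_name" \
        --public-key-material "fileb://${key_file}.pub"
}

# Example usage (uncomment to run):
# make_key_pair "$HOME/.ssh/id_rsa"
# import_key_pair "my-fieldview-key" "$HOME/.ssh/id_rsa"
```

The imported key should then appear under Key Pairs in the console, just as it does after the manual import.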

Set up the FlexNet Publisher License Manager for FieldView

To run FieldView on AWS you first need to create a small compute instance that will act as your license server. This instance needs little resource and can take advantage of the AWS free tier of compute instances.

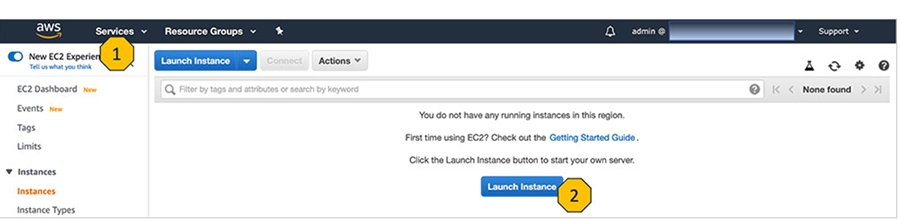

To set up the compute instance for your license server (Figure 3), go to Services (1), select EC2 and then Launch Instance (2). If you need to start instances often, you can of course script the procedure, but for explanation here, I will describe the manual process. (Note that AWS has since updated the screens shown in this and other screenshots, but the procedure remains essentially the same.)

Figure 3. AWS Console-EC2 Launch Instance

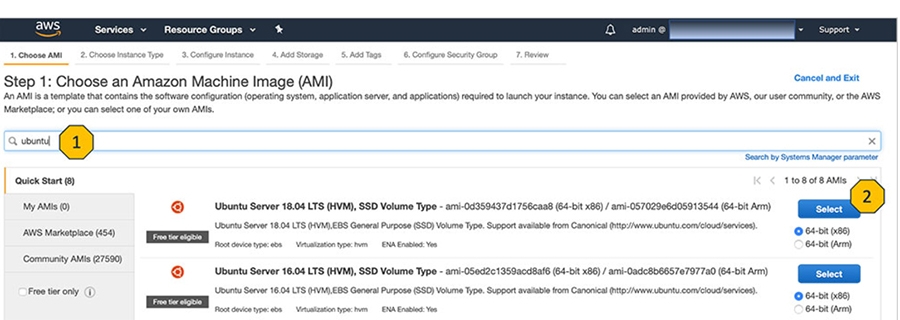

First, select the AMI you want to have installed on your instance (Figure 4). There are many AMIs available. You can search for them (1) and then select the desired one (2). Keep in mind that some license managers have strict OS requirements, so check those up front. Please use Linux as the platform for your FieldView License Manager. While producing this blog, we chose Ubuntu.

Figure 4. AWS Console AMI Selection

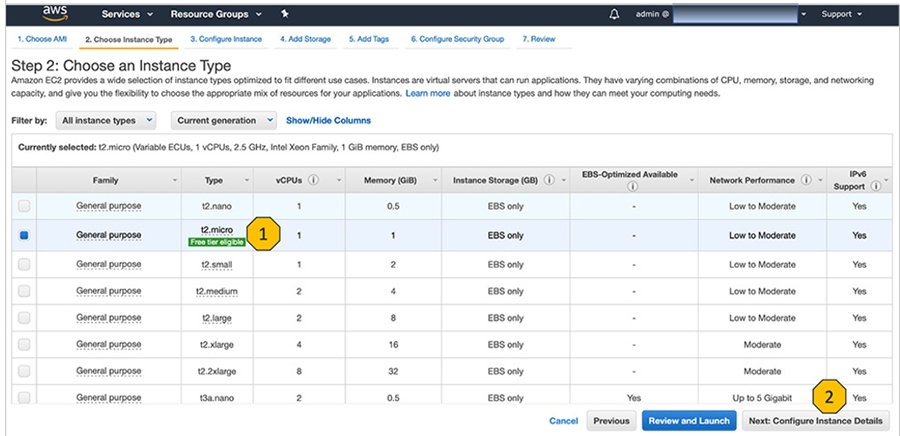

In the next step, you need to select the instance type. Here you can find a table listing the specifications and prices. The license manager does not need much compute resource, so we can make use of the free tier. Select the t2.micro instance (1), then press Configure Instance Details (2), as shown in Figure 5.

Figure 5: AWS Console Instance Type

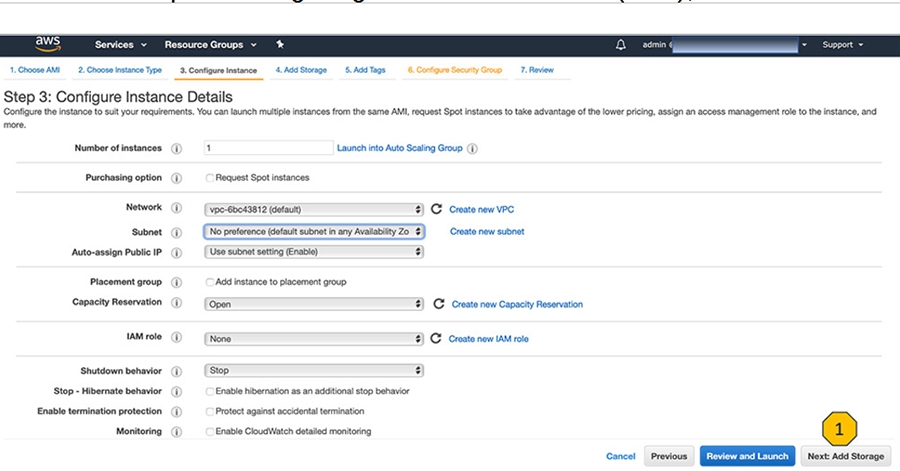

In the next step for configuring the instance details (EC2), stick to the default settings, and press Add Storage (1) shown in Figure 6.

Figure 6: AWS Console Configure Instance Details

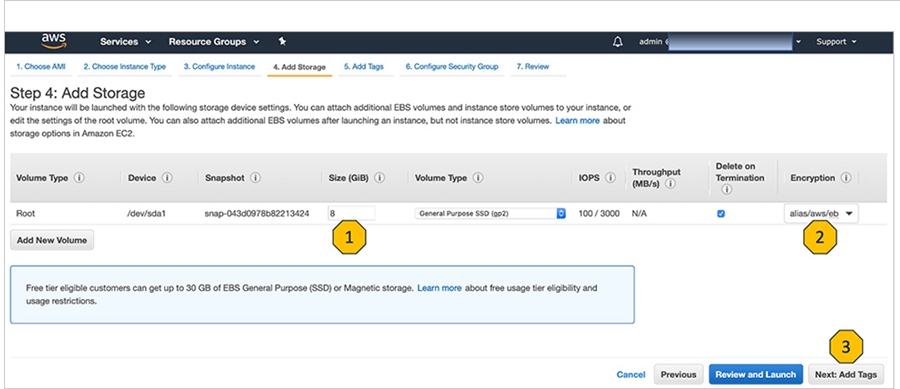

For the storage selection (Figure 7), 8 GB (1) is sufficient for our needs. Select encryption (2) to increase data security, then press (3) to add tags.

Figure 7: AWS Console Add Storage

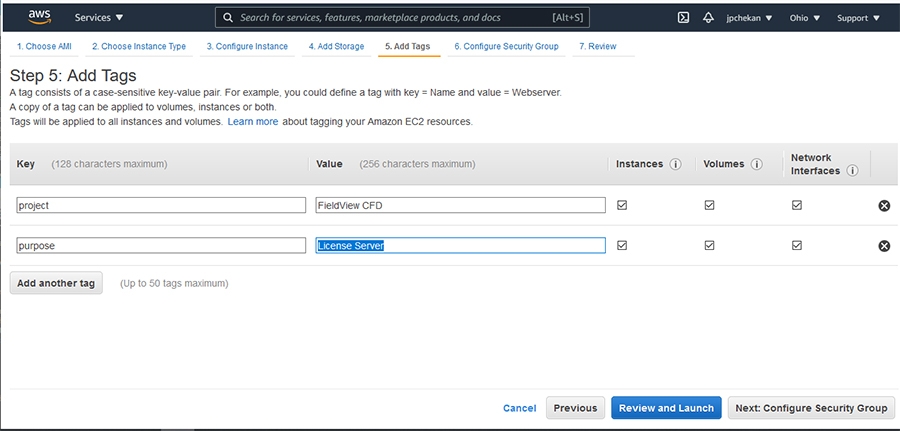

Adding tags (Figure 8) is recommended for any cloud project because these tags are searchable and can help a lot with the bookkeeping.

Figure 8: AWS Console Add Tags

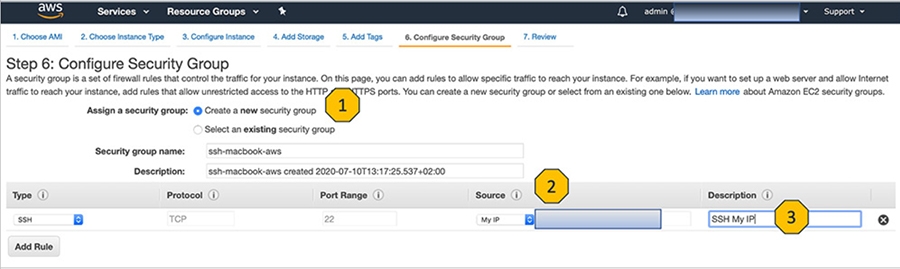

In the last step (Figure 9), we create a new security group, ssh-macbook-aws, to which your instance is added. This group allows SSH access from your local machine; otherwise, a default security group called launch-wizard-N will be created, open to the world. Later, we will add another security group to ensure communication between the license server and the instance on which FieldView will run.

Figure 9: AWS Console Configure Security Group
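A security group that admits SSH from a single address can also be created with the AWS CLI. The sketch below is one possible way to do it, not the procedure used for the screenshots. The function name is hypothetical, and the VPC ID and IP address are values you must supply yourself.

```shell
#!/bin/sh
# Sketch only: create a security group that admits SSH from one IPv4 address.
# Arguments: group name, VPC ID, your workstation's public IPv4 address.
create_ssh_group() {
    group_name=$1
    vpc_id=$2
    my_ip=$3
    # Create the group and capture its ID.
    group_id=$(aws ec2 create-security-group \
        --group-name "$group_name" \
        --description "SSH access from my workstation" \
        --vpc-id "$vpc_id" \
        --query GroupId --output text) || return 1
    # Allow inbound TCP port 22 from exactly one address (/32).
    aws ec2 authorize-security-group-ingress \
        --group-id "$group_id" \
        --protocol tcp --port 22 \
        --cidr "${my_ip}/32" >/dev/null || return 1
    printf '%s\n' "$group_id"
}

# Example usage (uncomment and fill in real values):
# create_ssh_group ssh-macbook-aws "<your VPC ID>" "<your public IP>"
```

Restricting the rule to a /32 address avoids the open-to-the-world behavior of the default launch-wizard-N group.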

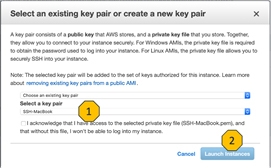

Figure 10: AWS Console Select Key Pair

When launching the instance, you will be asked for a key pair (Figure 10). Here you select the key pair generated earlier (1) in Figure 2.

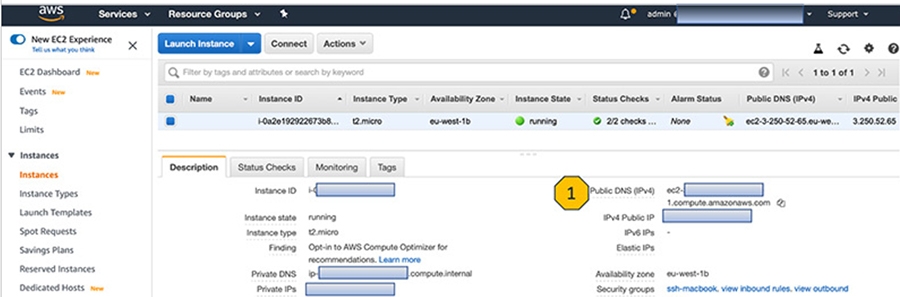

Now you are done with the setup! In the EC2 Instances view (Figure 11) you can see the details for your license server. To connect to the VM you will need the Public DNS (1).

Figure 11: AWS Console EC2 Instances Overview
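If you expect to launch the license server more than once, the console steps above can be approximated with the AWS CLI. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the AMI ID, key pair name, and security group ID come from your own account, and storage encryption is left at the account default rather than set explicitly.

```shell
#!/bin/sh
# Sketch only: launch one t2.micro instance tagged project=FieldView.
# Arguments: AMI ID, key pair name, security group ID.
launch_license_server() {
    ami_id=$1
    key_name=$2
    group_id=$3
    aws ec2 run-instances \
        --image-id "$ami_id" \
        --instance-type t2.micro \
        --count 1 \
        --key-name "$key_name" \
        --security-group-ids "$group_id" \
        --tag-specifications 'ResourceType=instance,Tags=[{Key=project,Value=FieldView}]' \
        --query 'Instances[0].InstanceId' --output text
}

# Print the Public DNS name of an instance, as shown in the Instances view.
public_dns() {
    aws ec2 describe-instances --instance-ids "$1" \
        --query 'Reservations[0].Instances[0].PublicDnsName' --output text
}

# Example usage (uncomment and fill in real values):
# id=$(launch_license_server "<AMI ID>" "<key pair name>" "<security group ID>")
# public_dns "$id"
```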

Configure the License Server

Now that your license server instance is running, you can install and run the FlexNet Publisher License Manager version 11.16.2.1 we use for FieldView.

Depending on the selected AMI, the username varies. In our case it is ubuntu. Now you need to get the Linux installation files for the license manager by downloading the FlexNet License Utilities from this link on the Customer Portal. (You’ll need to have an account there.) Once you’ve downloaded that to your local system, you can push these up to your AWS license instance:

> scp -i "<your Private Key File>" LicenseManagerUpdate_v11d16.tgz ubuntu@ec2-<public DNS>:

> scp -i "<your Private Key File>" ilight_virt_linux-v11.16.tgz ubuntu@ec2-<public DNS>:

Then log in to the instance to install and set up the License Manager. Your AWS EC2 console Connect option will provide you with login syntax for your specific instance.

> ssh -i "<your Private Key File>" ubuntu@ec2-<public DNS>

We recommend you create a directory /home/ubuntu/FV_license_manager as a location for installing the License Manager.

Unpack the .tgz file, and make sure the install script is executable:

> mkdir /home/ubuntu/FV_license_manager

> cd /home/ubuntu/FV_license_manager

> tar xvzf ~/LicenseManagerUpdate_v11d16.tgz

> chmod a+x installcd_flexlm.sh

Then run ./installcd_flexlm.sh and follow the prompts to install the licensing utilities for the Linux platform:

> ./installcd_flexlm.sh

When prompted, choose directory /home/ubuntu/FV_license_manager.

The installation process will complete, providing instructions to then run fv_server id. Send the output to passwords@tecplot.com to obtain your license file. However, before you run that command, note that this License Manager from Flexera needs two soft links, created by the root user, in order to run properly. For example:

> sudo ln -s /var/tmp /tmp

> sudo ln -s /lib64/ld-linux-x86-64.so.2 /lib64/ld-lsb-x86-64.so.3

As you wait for us to issue your license, please install the license DAEMON required for the virtual Linux OS (VM), renaming the standard DAEMON, as follows:

> cd /home/ubuntu/FV_license_manager/fv/linux_amd64

> mv ilight ilight.standard

Then, install your new VM DAEMON “ilight_virt”:

> tar xvzf ~/ilight_virt_linux-v11.16.tgz

Once you receive file license.dat, place it into the ‘data’ folder of the License Manager and start the License Manager:

> /home/ubuntu/FV_license_manager/fv/bin/fv_server start

The logfile /home/ubuntu/FV_license_manager/fv/flexlm/flexlm.log can be examined to ensure the license has started correctly.

You can also use the diagnose and status arguments (instead of ‘start’) to the fv_server command to check status. Contact support@tecplot.com if you need help getting the License Manager started properly.

Once started, the FieldView License Manager on this instance can serve FieldView seats to any system that can contact it.

It is recommended that the start command be placed in a script (as root user) that gets executed at boot time.

With Ubuntu, this would be the file /etc/rc.local:

#!/bin/bash

/home/ubuntu/FV_license_manager/fv/bin/fv_server start
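A boot script is only run if it is executable, which is easy to overlook. As a hedged sketch, the helper below writes such a script and sets its mode. The function name is hypothetical, and on a real instance you would run it as root with /etc/rc.local as the target and the full path to your fv_server as the second argument.

```shell
#!/bin/sh
# Sketch only: write a boot script that starts the license manager,
# then mark it executable so the system will actually run it.
# Arguments: target file (normally /etc/rc.local), full path to fv_server.
write_rc_local() {
    target=$1
    fv_server=$2
    cat > "$target" <<EOF
#!/bin/bash
# Start the FieldView license manager at boot.
$fv_server start
exit 0
EOF
    chmod 755 "$target"
}

# Example usage as root (uncomment to run):
# write_rc_local /etc/rc.local /home/ubuntu/FV_license_manager/fv/bin/fv_server
```

After a reboot, the status argument to fv_server is a quick way to confirm that the License Manager came up.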

Set up AWS Parallel Cluster

This guide focuses on simplicity and user experience, so the selected setup is based on the AWS ParallelCluster service. It is not only a convenient service for deploying your compute fleet in the cloud, but it also comes with NICE DCV (high-performance remote desktop and application streaming), which is what we want to take advantage of.

However, the free instances have so few resources that we will not exercise DCV. With DCV and FieldView both running, there would not be enough resources left to read CFD data of even minimal size, and the instance may become unstable.

A good overview and workshop for getting started with parallel cluster can be found in the AWS HPC Overview.

This will require a number of steps to set up:

- Create a new security group so our license server and parallel cluster can communicate securely.

- Create an AWS Cloud9 instance, which is used to configure the parallel cluster.

- Configure the parallel cluster itself.

Create a New Security Group

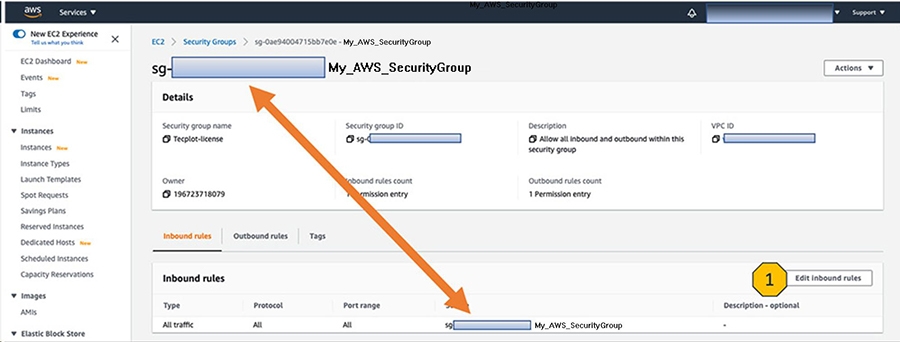

Now we go back to the AWS console (Figure 12) to create a new security group, in which we allow all inbound and outbound traffic from and to this security group itself. As this is not entirely straightforward, here are the steps to achieve the correct settings:

- Create a new security group and modify the inbound and outbound rules.

On page https://us-east-2.console.aws.amazon.com/ec2, select Security Groups. Above (in Figure 9), we used the name ssh-macbook-aws for the security group created with the license server instance. Select that security group and use Copy to New Security Group, naming the copy My_AWS_SecurityGroup.

- Edit the security group inbound and outbound rules to Type: “All traffic” and Destination: Custom, selecting this security group (itself) from the search field.

Figure 12. Edit Inbound/Outbound rules

Figure 13: AWS Console Additional SG

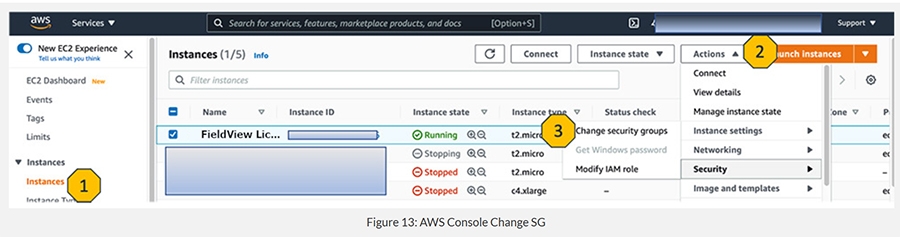

Then we add our license server EC2 instance (which we named FieldView License Instance when it was created) to this security group. We also add the compute instances on which we will launch FieldView 20 to this security group (Figure 13). By doing so, we ensure a secure connection between our instances. Points 1, 2, and 3 in Figure 14 correspond to selecting Instances, clicking Actions, and choosing Change Security Groups.

Figure 14: AWS Console Change SG
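The self-referencing rule and the group assignment can also be expressed with the AWS CLI. This is a sketch under assumptions rather than the exact console procedure: the function names are hypothetical, only the inbound rule is added (a new group's default outbound rule normally already allows all traffic), and the IDs are placeholders for your own.

```shell
#!/bin/sh
# Sketch only: let members of a security group talk to each other.
# IpProtocol=-1 means all protocols; the source is the group itself.
allow_group_to_itself() {
    group_id=$1
    aws ec2 authorize-security-group-ingress \
        --group-id "$group_id" \
        --ip-permissions "IpProtocol=-1,UserIdGroupPairs=[{GroupId=$group_id}]"
}

# Set the security groups of an instance. This REPLACES the current list,
# so pass every group the instance should keep, plus the new one.
set_instance_groups() {
    instance_id=$1
    shift
    aws ec2 modify-instance-attribute --instance-id "$instance_id" --groups "$@"
}

# Example usage (uncomment and fill in real values):
# allow_group_to_itself "<ID of My_AWS_SecurityGroup>"
# set_instance_groups "<license server instance ID>" "<SSH group ID>" "<ID of My_AWS_SecurityGroup>"
```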

Create the Cloud9 Instance

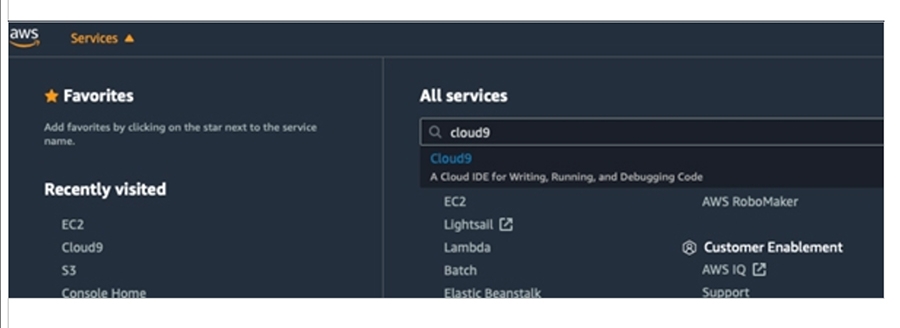

A Cloud9 instance gives you a web-based console which can be used for configuring your AWS parallel cluster. To create this instance, use the AWS search to find Cloud9 (Figure 15), then click on “Create environment” (Figure 16).

Figure 15. AWS console All Services

Figure 16: AWS Console Settings for Cloud9 Instance

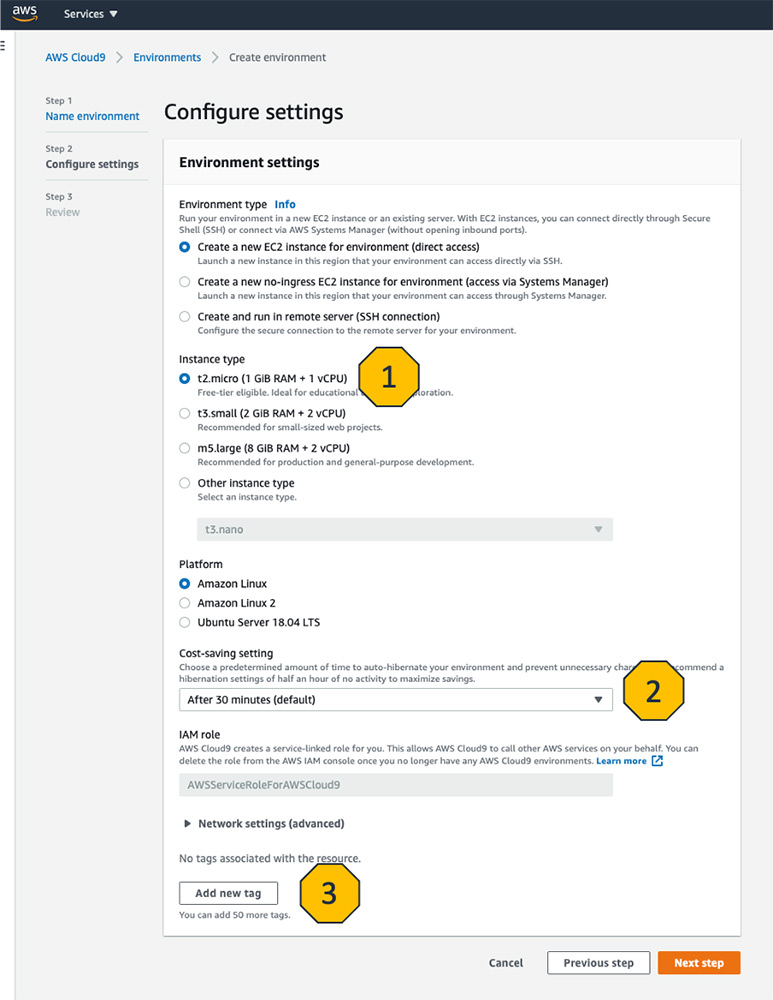

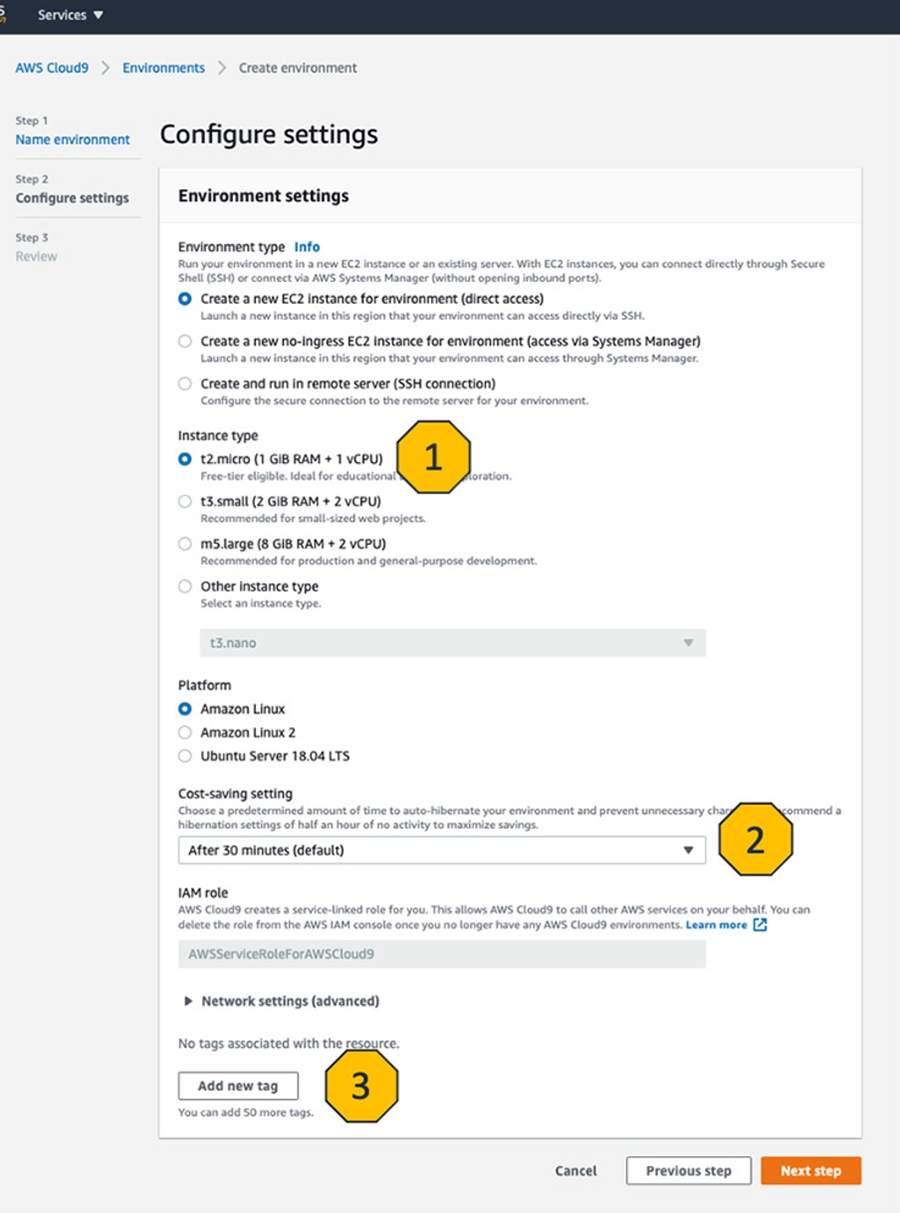

To create an environment, we need to provide a name and description (not pictured). Apart from that, we can stick to the default settings (Figure 17a). That includes the t2.micro instance (1) again, which is free tier eligible. The instance will also be stopped automatically when idle for more than 30 minutes (2). Remember to add tags for this instance as well (3), something like “project=FieldView”.

Figure 17a: AWS Console Settings for Cloud9 Instance

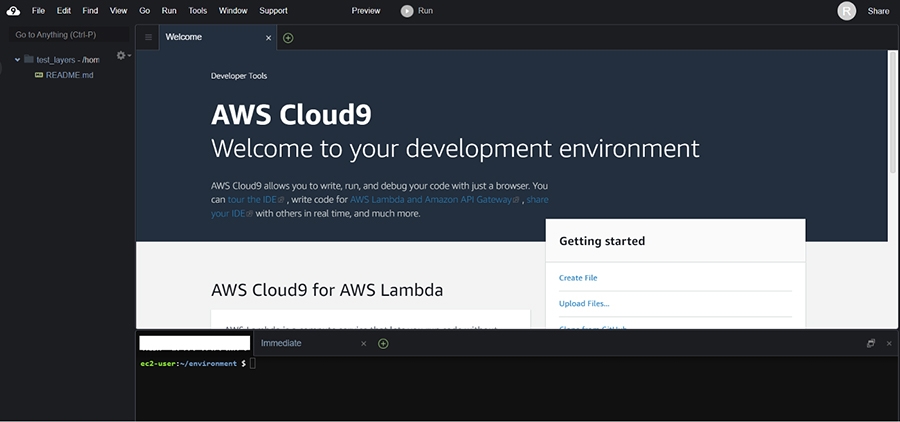

Figure 17b. The Cloud9 instance will open the IDE in the browser.

Once you’ve confirmed creation of the Cloud9 instance, it will open the IDE in the browser (shown above). To configure our Cloud9 instance and prepare the launch of our parallel cluster, we start with the installation of the AWS CLI and ParallelCluster, as well as the creation of an SSH key pair:

> pip3 install awscli -U --user

> pip3 install aws-parallelcluster -U --user

> aws ec2 create-key-pair --key-name lab-2-your-key --query KeyMaterial --output text > ~/.ssh/id_rsa

> chmod 600 ~/.ssh/id_rsa

(If pip3 is not already installed on your Cloud9 instance, you can call python3 -m pip instead.)

Create the Parallel Cluster

To access a parallel cluster on AWS effectively requires three steps:

- Configure

- Create

- Connect

Configure the Cluster

The first step in creating an AWS parallel cluster is the creation of a config file which defines how the cluster is to be set up. A step-by-step guide can be found here and the documentation for all options here. Also there is a sample file attached to this article or available on GitHub, with which you can get started.

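Values for two important settings can be obtained directly from the command line in the Cloud9 console using the following commands, as described here. The first command stores the MAC address of the instance's network interface in IFACE, which the other two commands rely on:

> IFACE=$(curl --silent http://169.254.169.254/latest/meta-data/network/interfaces/macs/)

> SUBNET_ID=$(curl --silent http://169.254.169.254/latest/meta-data/network/interfaces/macs/${IFACE}/subnet-id)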

> VPC_ID=$(curl --silent http://169.254.169.254/latest/meta-data/network/interfaces/macs/${IFACE}/vpc-id)

Once you’ve run these commands you can check the contents using the echo command:

> echo $SUBNET_ID

> echo $VPC_ID
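If the echo commands print nothing, one possible cause is that the instance only accepts token-based metadata requests (IMDSv2). The sketch below shows a token-based variant of the same lookup. The function names are hypothetical, and whether your Cloud9 instance requires a token is an assumption to verify in its instance settings.

```shell
#!/bin/sh
# Sketch only: read instance metadata using an IMDSv2 session token.

# Fetch one metadata path, requesting a short-lived token first.
imds_get() {
    path=$1
    token=$(curl --silent -X PUT "http://169.254.169.254/latest/api/token" \
        -H "X-aws-ec2-metadata-token-ttl-seconds: 300") || return 1
    curl --silent -H "X-aws-ec2-metadata-token: $token" \
        "http://169.254.169.254/latest/meta-data/$path"
}

# Look up the subnet and VPC of the first network interface and
# store them in SUBNET_ID and VPC_ID.
lookup_network_ids() {
    mac=$(imds_get "network/interfaces/macs/" | head -n 1)
    mac=${mac%/}    # the listing ends each MAC address with a slash
    SUBNET_ID=$(imds_get "network/interfaces/macs/$mac/subnet-id")
    VPC_ID=$(imds_get "network/interfaces/macs/$mac/vpc-id")
}

# Example usage on the Cloud9 instance (uncomment to run):
# lookup_network_ids && echo "$SUBNET_ID $VPC_ID"
```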

The command pcluster configure will prompt for information such as Region, VPC, Subnet, Linux OS, and head/compute node instance type. We recommend letting pcluster configure prompt you and accepting the defaults at most of the prompts, with the exceptions noted after the command:

> pcluster configure

Be sure to select the key pair you created above if more than one is found:

EC2 Key Pair Name [aws_id]:

Select No for Automate VPC creation, then choose your VPC from the list of allowed values. (Again, open the instance to obtain those values, or run the above commands in your AWS CLI shell.)

Automate VPC creation? (y/n) [n]: n

Allowed values for VPC ID:

  #  id            name    number_of_subnets
---  ------------  ------  -------------------
  1  vpc-ac46cac7          9

Select No for Automate Subnet creation: (Again, open the instance to obtain those values, or run the above commands in your AWS CLI shell.)

Automate Subnet creation? (y/n) [y]: n

Allowed values for head node Subnet ID:

Allowed values for compute Subnet ID:

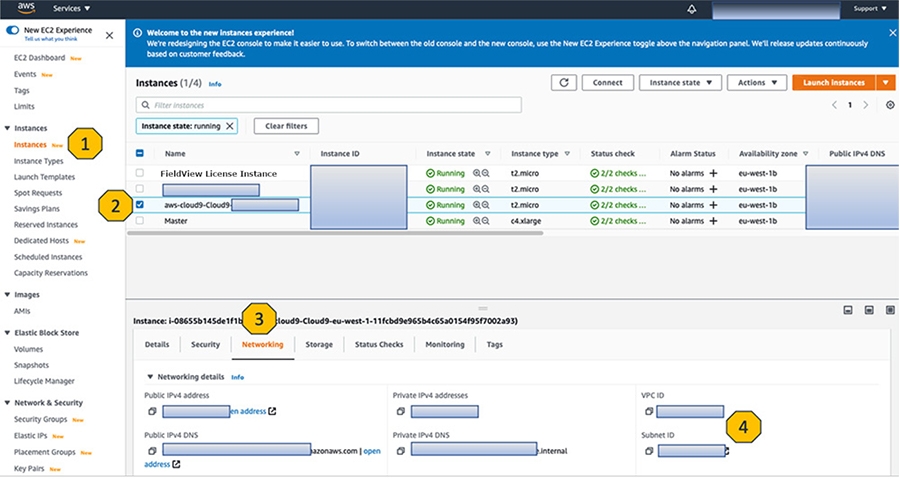

In the EC2 instances (Figure 18) overview (1) you should ensure that your Cloud9 instance (2) is in the same VPC and subnet (4) as your license server. These are exactly the values you also need to provide for your parallel cluster and the head node on which we will install FieldView 20.

Note: This demonstration does not use the DCV Viewer for a desktop session. To do so, the config file you created would need a dcv_settings entry in its cluster section and a matching dcv section, for example:

dcv_settings = default

[dcv default]

enable = master

Depending on your priorities, you should pay special attention to the selection of instance types for the head node and compute fleet. Here you can go for a lot of compute power or you can go for free with t2.micro. Although this is not the recommended instance type to use with DCV Viewer, it does work with low compute load, so it’s a good choice if you just want to get started and give it a try at no cost. Here you can find more examples and explanation for the usage of DCV Viewer with AWS instances. The default location for the config files is ~/.parallelcluster/.
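To give a feel for what the resulting config file may contain, here is a hedged sketch that writes a minimal file in the ParallelCluster 2.x style used in this guide. It is not the sample file mentioned above. The function name is hypothetical, and base_os, scheduler, and the additional_sg entry (intended to place cluster nodes in the shared security group) are assumptions you should check against the ParallelCluster documentation for your version.

```shell
#!/bin/sh
# Sketch only: write a minimal ParallelCluster 2.x style config file.
# Arguments: config path, region, key pair name, VPC ID, subnet ID,
#            ID of the security group shared with the license server.
write_pcluster_config() {
    config_file=$1
    region=$2
    key_name=$3
    vpc_id=$4
    subnet_id=$5
    shared_sg=$6
    mkdir -p "$(dirname "$config_file")"
    cat > "$config_file" <<EOF
[aws]
aws_region_name = $region

[global]
cluster_template = default

[cluster default]
key_name = $key_name
base_os = alinux2
scheduler = slurm
master_instance_type = t2.micro
compute_instance_type = t2.micro
vpc_settings = default

[vpc default]
vpc_id = $vpc_id
master_subnet_id = $subnet_id
additional_sg = $shared_sg
EOF
}

# Example usage (uncomment and fill in real values):
# write_pcluster_config "$HOME/.parallelcluster/config" us-east-2 \
#     lab-2-your-key "$VPC_ID" "$SUBNET_ID" "<ID of My_AWS_SecurityGroup>"
```

Running pcluster configure interactively remains the safer route, since it validates your answers against your account.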

Figure 18: AWS Console check Cloud9 Instance

Create the Cluster

If you have properly set up the config file, you can start the head node (creating the cluster) with the following command:

> pcluster create my-cluster -c ~/.parallelcluster/config

The creation will take a few minutes. You can check the status with:

> pcluster status my-cluster

Connect to the Cluster

When the creation step has completed, you can connect to the head node via ssh (or DCV, if you’ve taken those steps, and have enough resource on your instance.)

> pcluster ssh my-cluster

Again, we will not be demonstrating use of NICE DCV to be able to access the graphical desktop session. However, if you have instances with enough resource, you would open web-based DCV desktop to your head node using the following command. This command will either open a browser window on your machine or will display a URL in the terminal, which you can copy & paste into your browser.

> pcluster dcv connect my-cluster

Note: The NICE DCV native client, available for Linux, Windows, and Mac, can further improve the user experience through easier handling of keyboard, mouse, and shortcut input. You can establish the connection with the same provided URL.

Install FieldView 20 on the Cluster

Now we can start with the FieldView 20 Linux installation just as if we were on a local machine with a clean OS, but already with AWS CLI and the right credentials installed to access your AWS services. On your local workstation, be sure you have an account at the Customer Portal. Then download the FieldView Client from the downloads page.

Once you’ve downloaded that to your local system, you can push it up to the head node of your cluster. The FieldView Client package contains serial and parallel servers as well, so there’s no need to upload the stand-alone servers package.

Your public DNS can be found on the instance details of AWS Instances for the master. The Connect option will show you the ssh syntax for connecting.

> scp -i "<your Private Key>" fv20-linux_amd64.tar.gz ec2-user@ec2-<public DNS>:

Then return to your AWS CLI console, or login via ssh, and install the package.

> mkdir FV20 ; cd FV20

> tar xvzf $HOME/fv20-linux_amd64.tar.gz

> ./installfv.sh

When prompted, enter the full pathname to the FV20 directory you’ve created. (There is no need to install the license server when prompted.) Now, you can execute the installed demonstration script without licensing to confirm FV has been correctly installed:

> cd /home/ec2-user/FV20/fv/demo

> ../bin/fv -s f18_show.scr -demo -batch -software_render

Finally, access the license running on your licensing instance, by using an environment variable, as follows:

> ILIGHT_LICENSE_FILE=1602@ip-<license_server_IP> ../bin/fv -demo -s f18_show.scr -batch -software_render
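If FieldView cannot find a license, it helps to rule out a networking problem before looking elsewhere. The sketch below checks that the license port answers and only then exports the variable. The function names are hypothetical, it assumes an nc (netcat) build that supports the -z and -w options, and it uses port 1602 as in the command above.

```shell
#!/bin/sh
# Sketch only: confirm the license server is reachable, then export
# ILIGHT_LICENSE_FILE for the current shell session.

# Build the port@host value from a host and a port.
license_spec() {
    printf '%s@%s\n' "$2" "$1"
}

# Succeed when the given host and port accept a TCP connection within 5 seconds.
license_port_open() {
    nc -z -w 5 "$1" "$2"
}

# Arguments: license server host, optional port (defaults to 1602).
use_license_server() {
    host=$1
    port=${2:-1602}
    if license_port_open "$host" "$port"; then
        ILIGHT_LICENSE_FILE=$(license_spec "$host" "$port")
        export ILIGHT_LICENSE_FILE
    else
        echo "cannot reach $host:$port; check the shared security group" >&2
        return 1
    fi
}

# Example usage on the head node (uncomment and fill in the host):
# use_license_server "ip-<license_server_IP>" && ../bin/fv -s f18_show.scr -batch -software_render
```

A failed check usually points back to the security group shared by the license server and the cluster nodes.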

With the above, you now have the FieldView Client (available for remote batch runs) as well as servers (for client-server operation from your local system, provided you are properly configured). Note that only the FieldView Client needs to find a license.