In this video, you will learn how to experience significant speedups and maximize your performance in FieldView. To achieve these speedups and performance improvements, you can run FieldView in Parallel.

FieldView MPI Parallel

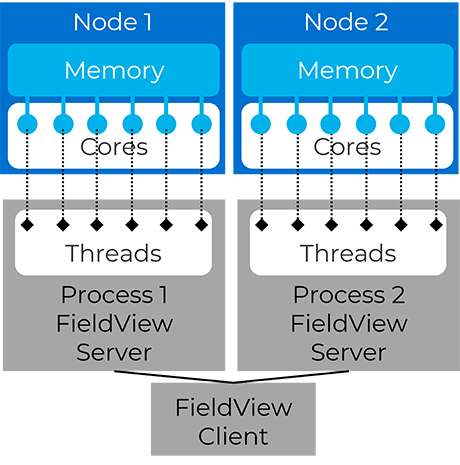

Let’s start by reviewing some basic principles of FieldView Parallel. FieldView uses hybrid parallelization, a mix of two approaches: multi-threading, where multiple threads work within a process on tasks like computing streamlines or formulas, and MPI Parallel, which efficiently distributes the load of heavy operations by running processes on all available nodes. This video’s focus is MPI Parallel.

Using FieldView MPI Parallel results in faster read times and improved performance with heavy operations. Let’s walk through some real cases that demonstrate the benefits of MPI Parallel.

Case #1: Unstructured Grid from Fluent

The first case is an unstructured simulation from Fluent where the grids vary widely in size. For the benchmark, the case was read into FieldView, the geometry of the Formula One car was computed, and the isosurface was computed using the native Q-criterion calculation in FieldView. Even though the grids weren’t fully balanced, we still see a 3x speedup on eight cores versus serial. When we go to 64 cores, we see a 4x speedup. These are good performance improvements when taking advantage of MPI Parallel.

Case #1: Unstructured Grid from Fluent – 3x speedup on 8 cores and 4x speedup on 64 cores.

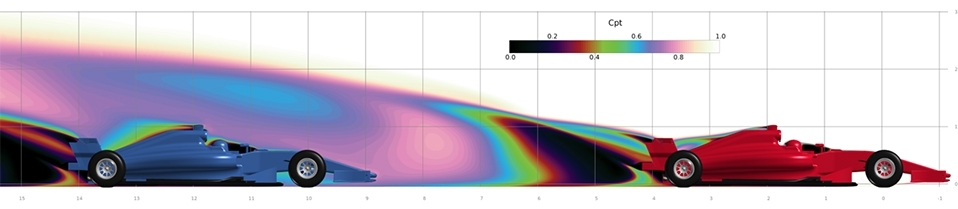

Case #2: Unstructured Grid from OpenFOAM

The second case is an unstructured dataset from OpenFOAM. It is made of two Formula One cars following each other. It’s an ideal case for MPI Parallel because the grids are equally balanced. Performing the same benchmark as the previous case, we see a 4x speedup with eight cores from serial, and a 14x speedup with 64 cores. This gives you an idea of what is possible when MPI is fully utilized.

Case #2: Unstructured Grid from OpenFOAM – 4x speedup on 8 cores, 14x speedup on 64 cores.

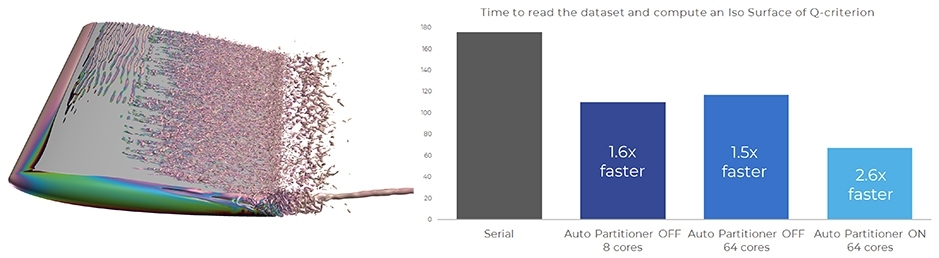
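To put the quoted speedups in perspective, it can help to convert them to parallel efficiency (speedup divided by core count). The short sketch below does that arithmetic for the figures given in the narration for Cases #1 and #2. The function name `parallel_efficiency` is just an illustrative helper, not part of FieldView.

```python
# Speedups quoted in the narration: (case, cores, speedup vs. serial).
quoted = [
    ("Case 1 (Fluent)", 8, 3.0),
    ("Case 1 (Fluent)", 64, 4.0),
    ("Case 2 (OpenFOAM)", 8, 4.0),
    ("Case 2 (OpenFOAM)", 64, 14.0),
]


def parallel_efficiency(speedup: float, cores: int) -> float:
    """Fraction of ideal linear scaling achieved: speedup / cores."""
    return speedup / cores


for case, cores, speedup in quoted:
    eff = parallel_efficiency(speedup, cores)
    print(f"{case}: {speedup:g}x on {cores} cores -> efficiency {eff:.1%}")
```

The balanced OpenFOAM case keeps a noticeably higher efficiency at 64 cores than the unbalanced Fluent case, which is consistent with the point that grid balance drives how well MPI scales.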

Case #3: Unbalanced Structured Grids

Now, let’s look at a third case that has unbalanced structured grids. The grids are partitioned into 5 large grids and 12 smaller ones. With this imbalance, we don’t experience the same performance improvements as we did in the previous cases. Unless something is done to balance the partitions, the speedups with MPI Parallel are minor. Luckily, FieldView has a solution in the form of our Auto Partitioner. The Auto Partitioner takes care of load balancing issues where large blocks are preventing an even distribution across processes. Embedded in FieldView, it does a real-time partition on the fly, smartly cuts up the structured grids, and evenly redistributes the new partitions across available nodes. This allows you to take full advantage of the MPI Parallel capabilities in FieldView.

The Auto Partitioner

Now, let’s look at Case #3 again with the Auto Partitioner applied (see video at timestamp 2:52). You will now see better speedups and performance improvements.

Case #3 with Auto Partitioner applied – 2.6x faster on 64 cores
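To see why a few large blocks cap the achievable speedup, consider the rough sketch below. It is not the Auto Partitioner's actual algorithm, which is not described here. It assumes hypothetical block sizes (5 large and 12 small grids), assigns whole blocks to processes with a simple greedy longest-first heuristic, and reports an idealized upper bound on speedup (total work divided by the heaviest process load). It then splits the large blocks into pieces and repeats the estimate.

```python
import heapq
import math


def max_load(block_sizes, n_procs):
    """Greedy longest-first assignment; returns the heaviest process load."""
    loads = [0.0] * n_procs
    heapq.heapify(loads)
    for size in sorted(block_sizes, reverse=True):
        lightest = heapq.heappop(loads)      # least-loaded process so far
        heapq.heappush(loads, lightest + size)
    return max(loads)


def speedup_bound(block_sizes, n_procs):
    """Idealized bound: ignores I/O, communication, and serial stages."""
    return sum(block_sizes) / max_load(block_sizes, n_procs)


def split_large(block_sizes, target):
    """Cut every block larger than target into equal pieces no larger than target."""
    out = []
    for size in block_sizes:
        pieces = max(1, math.ceil(size / target))
        out.extend([size / pieces] * pieces)
    return out


# Hypothetical sizes in millions of cells (assumed, not from the benchmark).
blocks = [20.0] * 5 + [1.0] * 12
n_procs = 64

before = speedup_bound(blocks, n_procs)
after = speedup_bound(split_large(blocks, sum(blocks) / n_procs), n_procs)
print(f"bound without splitting: {before:.1f}x")
print(f"bound with splitting:    {after:.1f}x")
```

With whole blocks, the largest grid sets the heaviest load regardless of how many processes are available, so adding cores stops helping. Splitting removes that ceiling. Real speedups, like the 2.6x reported above, are lower than such bounds because the bound ignores everything except load distribution.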

Which Workflows Benefit from FieldView MPI?

A question often asked is “Will my workflow benefit from MPI Parallel?” The operations where you’ll experience the largest speedups (video timestamp 3:00) are things like data input and computing isosurfaces. Operations like rendering are not affected by MPI. In the example (video timestamp 3:23), cut planes are being rendered at separate locations. When MPI Parallel is utilized, you’ll see a huge improvement with the data input, a good speedup with coordinate surfaces, but no difference with the rendering operation.

Transient Animation

Another example (video timestamp 3:46) is a transient animation where the data is input, an isosurface is computed, and the image is rendered. This cycle is repeated for all timesteps. We see a huge speed improvement in this case because operations such as data input and isosurface computation are significantly impacted by MPI.
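Because rendering is unaffected by MPI, the overall gain for a read, compute, render cycle depends on how much of each timestep is spent in the accelerated stages. The sketch below is a back-of-the-envelope estimate in the spirit of Amdahl's law. The per-stage timings and speedup factors are hypothetical placeholders, not measurements from the video, and `cycle_time` is an illustrative helper.

```python
def cycle_time(read_s, iso_s, render_s, read_speedup=1.0, iso_speedup=1.0):
    """Time for one timestep: data input + isosurface + rendering.

    Rendering is left unscaled because MPI does not accelerate it.
    """
    return read_s / read_speedup + iso_s / iso_speedup + render_s


# Hypothetical per-timestep timings in seconds (assumed for illustration).
read_s, iso_s, render_s = 10.0, 5.0, 1.0

serial = cycle_time(read_s, iso_s, render_s)
parallel = cycle_time(read_s, iso_s, render_s, read_speedup=4.0, iso_speedup=4.0)
overall = serial / parallel

print(f"serial cycle:   {serial:.2f} s")
print(f"parallel cycle: {parallel:.2f} s")
print(f"overall speedup per timestep: {overall:.2f}x")
```

Under these assumptions the overall speedup stays below the per-stage factor because the rendering time is fixed. The more a workflow is dominated by data input and isosurface computation, the closer it gets to the full benefit.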

Try FieldView MPI

Try using MPI on the cases you are running today. Most common FieldView licenses can run parallel on up to eight cores, so you can take advantage of this right away.

You can extend the FieldView MPI Parallel benefits by running on more than 8 cores. We will work closely with you to ensure your data is processed in the most effective way. Contact us for a further discussion about MPI Parallel and to try a parallel license with your datasets.

- Standard licenses allow local and remote parallel on up to 8 cores.

- Options are available for running on more cores.

- Our engineers will work with you to export parallel runs efficiently to fully benefit from MPI.

- Contact sales@tecplot.com with questions or for testing higher parallel options.