TecIO, Tecplot’s input-output library, enables applications to write Tecplot binary files. Its parallel version, TecIO-MPI, enables MPI-parallel applications to output Tecplot’s newer binary format, .szplt. TecIO-MPI was first released in 2016. Since then, some customers have told us that its parallel performance when outputting unstructured-grid solution data needed improvement, so we set out to understand and eliminate the bottlenecks in TecIO-MPI’s execution.

Customer reports 15x speed-up in writing data from FUN3D when using the new TecIO-MPI library!

TecIO Library

Understanding What Customers are Seeing

To understand what our customers were seeing, we needed to run our software on hardware representative of what they run on, namely, a supercomputer. The problem is that we don’t own one. We also needed parallel profiling software to help us identify bottlenecks, or “hot spots,” in our code, including in the MPI inter-process communication. We made some progress on Amazon EC2 using open-source profiling software, but had greater success using Arm (formerly Allinea) Forge software at the National Center for Supercomputing Applications (NCSA).

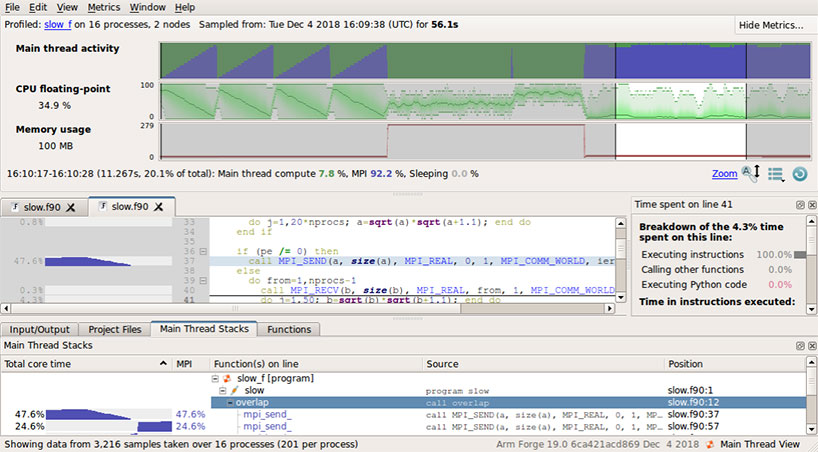

NCSA has an industrial partners program that provides access to its iForge supercomputer and a wide array of open-source and commercial software, including Arm Forge. iForge has over 2,000 CPU cores and runs IBM’s GPFS parallel file system, so it was a good platform for testing our software. Arm Forge, specifically its MAP profiling tool, let us easily identify hot spots in our software and drill down through the layers of our source code to see exactly where the performance problems lay.

A second application to NCSA gave us access to its Blue Waters petascale supercomputer, which has about 400,000 CPU cores and uses the Lustre parallel file system.¹ This let us scale our testing up to larger problems and measure performance on another popular parallel file system.

Arm MAP with Region of Time Selected

Performance Improvement Results

Using iForge hardware and Arm Forge software, we were able to identify two sources of performance problems in TecIO-MPI:

- Excessive time spent writing small chunks of data to disk.

- Too much inter-process exchange of small chunks of data.

Consolidating these small writes and small exchanges into fewer, larger ones has reduced output time by an order of magnitude. Testing with three different computational fluid dynamics (CFD) flow solvers shows output times, for both structured and unstructured grids, roughly equal to the time required to compute a single solver iteration.

How to Obtain TecIO Libraries

TecIO and TecIO-MPI, along with instructions in Tecplot’s Data Format Guide, are included with every Tecplot 360 installation.

For TecIO-MPI applications, however, we recommend that you obtain the source and compile it against your own MPI implementation, because the various MPI implementations are not binary-compatible with one another. Source for TecIO and TecIO-MPI, and the Data Format Guide, are all available via a My Tecplot account.

For more information and access to the TecIO Library, please visit:

¹This research is part of the Blue Waters sustained-petascale computing project, which is supported by the National Science Foundation (awards OCI-0725070 and ACI-1238993) and the state of Illinois. Blue Waters is a joint effort of the University of Illinois at Urbana-Champaign and its National Center for Supercomputing Applications.

By Dr. David E. Taflin

Senior Software Development Engineer | Tecplot, Inc.
