Tecplot 360 vs. ParaView for CONVERGE Data

At Tecplot we know that you have choices when it comes to post-processing and that your time is important to you, so we’ve done some performance testing to help you decide which post-processor will perform the best with your data. Of course, performance is dependent on several factors – and which data type you are using is an important one. For today’s post we’ll be diving into performance with CONVERGE data only.

CONVERGE users are fortunate to have access to ‘Tecplot for CONVERGE’ (TfC) as part of their CONVERGE license. However, for users who are looking for more power than what TfC offers out of the box (e.g. batch processing), the most popular options are Tecplot 360 and ParaView.

TfC, 360, and ParaView each include a direct data reader for CONVERGE post*.h5 files, which means that with these post-processors there’s no need to run post_convert. By avoiding running post_convert you’re saving yourself time and disk space.

Experiment

Since many CONVERGE users need to create movies of their results, we chose a reasonably large, multi-cycle internal combustion engine (ICE) simulation. This dataset is composed of 1278 timesteps, totaling 169 GB on disk.

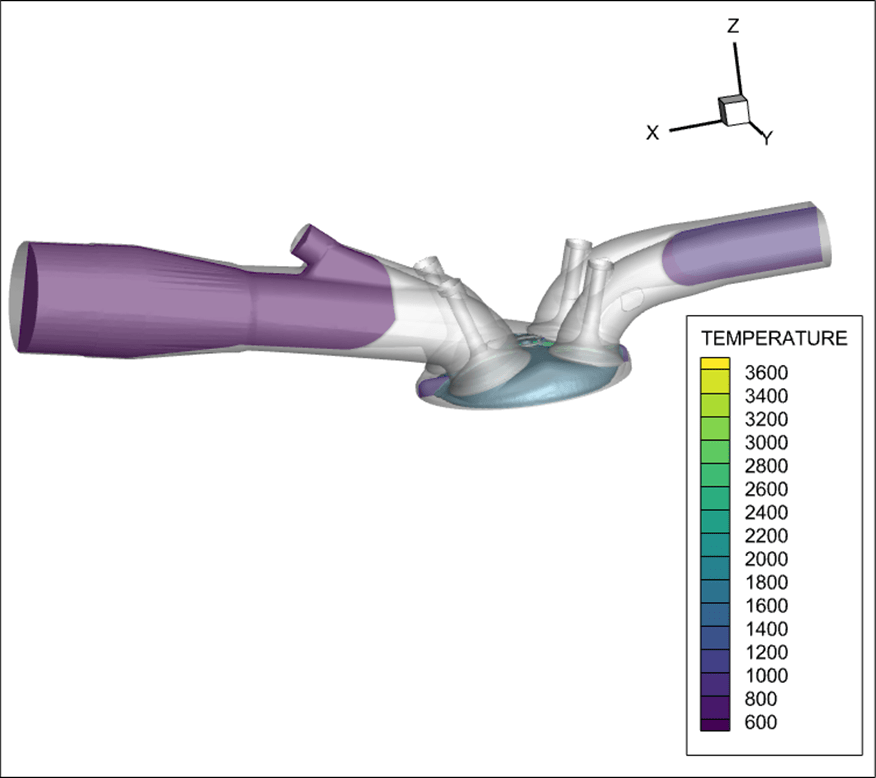

The goal is to capture the execution time and peak RAM required to create a plot which consists of a slice colored by temperature and an iso-surface of the flame front (temperature = 1700). We repeated this for each time step in the data series for a total of 1278 images produced. Figure 1 shows an example of the plot:

Figure 1: Example Image from ICE Simulation Showing Temperature & Flame Front Iso-Surface

Setup

We conducted our experiments on a Windows 10 machine with 32 logical cores, 128 GB RAM, and an NVIDIA Quadro K4000 graphics card. The data was stored locally on a spinning hard drive to avoid any slowdowns due to network traffic.

All tests were run unattended in batch mode (using PyTecplot for Tecplot 360, and pvbatch for ParaView 5.10). We used the Python memory-profiler utility to capture timing and RAM information.

Figure 2: Animation of ICE Simulation Created with Tecplot 360 Image Exports (For animation speed and file size, every 4th image was used to create this animation)

Results

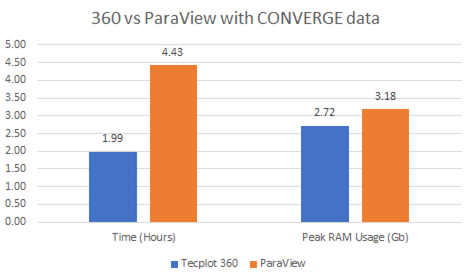

Serial Execution

The graph below shows the outcome of our tests running the processes serially (i.e. producing 1 image at a time).

Figure 3: 360 vs ParaView: Time per Image & Peak RAM Usage

See the PyTecplot script on our GitHub.

For the generated plot, Tecplot 360 uses multi-threading for a number of operations such as: deriving node-located values from the cell-centered Temperature value (a pre-requisite for iso-surface creation), slice creation, and iso-surface creation.

ParaView does not publish a list of which filters are multi-threaded, but “all common filters are [multi-threaded]” [1,2].

So, serial here means that we loaded the series of CONVERGE post*.h5 files and ran through the animation, creating an image one timestep at a time.

For the HDF5 dataset performance tests, we found that Tecplot 360 in minimized memory mode gave the fastest processing time at 1.99 hours, or ~5.6 seconds per image. 360 in minimized memory mode also used the least RAM, peaking at 2.7 GB. Note that by default 360 keeps 30-70% of the data it has loaded in RAM in case that data needs to be re-rendered. Once RAM usage meets the 70% threshold, 360 offloads stored data until it reaches 30%. This behavior is handy in the GUI when moving back and forth between time steps. Setting 360 to minimized memory mode prevents it from keeping data from previous time steps in RAM.
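The per-image figure follows from the total run time and the number of images. A minimal sketch of that arithmetic, using only the numbers quoted above (the function name is ours, for illustration):

```python
TOTAL_HOURS = 1.99   # serial Tecplot 360 run in minimized memory mode
NUM_IMAGES = 1278    # one exported image per timestep


def seconds_per_image(total_hours, num_images):
    """Convert a total run time in hours to average seconds per image."""
    return total_hours * 3600.0 / num_images


if __name__ == "__main__":
    print(f"~{seconds_per_image(TOTAL_HOURS, NUM_IMAGES):.1f} s per image")
```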

Did we run ParaView using MPI? – The current recommendation is to only run ParaView using MPI when you can distribute the data [3]. CONVERGE data is single block, so it’s not ideal for distribution. Furthermore, the ParaView CONVERGE reader does not read in parallel [4]. As such, we did not run ParaView using MPI.

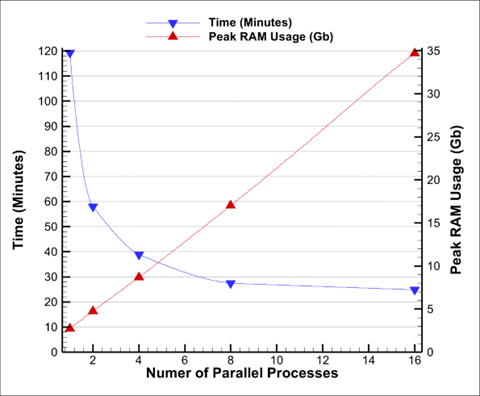

Parallel Execution

Another benefit of PyTecplot (i.e. batch mode) is that image export scripts can be written to use multiple concurrent PyTecplot processes—slashing the time to process images. We utilized our ParallelImageCreator.py PyTecplot script (located on our GitHub — see the documentation for examples) which processes multiple timesteps simultaneously to put more cores to work. In the plot below you can see how running even just two concurrent processes can drastically reduce the processing time:
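To illustrate the general idea of processing several timesteps at once, here is a minimal sketch built on Python's standard multiprocessing module. It is not the ParallelImageCreator.py script: `render_chunk` is a hypothetical placeholder, and the image file names are assumptions. In a real script each worker would start its own post-processor session, load the data, and export one image per timestep.

```python
from multiprocessing import Pool


def split_round_robin(items, n_workers):
    """Deal items out to n_workers lists, like dealing cards."""
    return [items[i::n_workers] for i in range(n_workers)]


def render_chunk(timestep_indices):
    # Hypothetical placeholder: a real worker would load the data and
    # export an image for each index. This stub only returns the file
    # names it would have written.
    return [f"image_{idx:04d}.png" for idx in timestep_indices]


def render_all(n_timesteps, n_workers):
    """Spread timesteps over worker processes and collect the results."""
    chunks = split_round_robin(list(range(n_timesteps)), n_workers)
    with Pool(processes=n_workers) as pool:
        results = pool.map(render_chunk, chunks)
    # Flatten the per-worker lists and restore timestep order.
    return sorted(name for chunk in results for name in chunk)


if __name__ == "__main__":
    names = render_all(n_timesteps=1278, n_workers=8)
    print(len(names), names[0], names[-1])
```

Dealing timesteps out round-robin keeps each worker's share roughly equal even when the count does not divide evenly. Each worker holds its own copy of whatever it loads, which is why RAM use grows with the number of processes.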

Figure 4: Total time to Execute and RAM Usage against # of Parallel 360 Processes

Our script, running with 16 cores, took an average of ~1.2 seconds per image. This is 4.8 times faster than Tecplot 360’s fastest run without parallelizing the image exports and 10.7 times faster than ParaView! However, this comes at a significant cost in terms of RAM (34.7 vs 2.74 GB, 12.6 times more RAM). We observed nearly identical speed with half the RAM when parallelizing with 8 processes vs. 16. With parallel processes you can get hardware contention (which is why we don’t see a linear improvement in performance), so it may take some experimentation with your data to find the ideal amount of concurrency to use.
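As a rough check on the trade-off, the ratios can be recomputed from the rounded figures quoted in this post. The sketch below gives about 4.7x for speed and 12.7x for RAM. The small differences from the 4.8 and 12.6 quoted above presumably come from the unrounded measurements, which are not listed here.

```python
SERIAL_S_PER_IMAGE = 5.6     # rounded, serial minimized memory run
PARALLEL_S_PER_IMAGE = 1.2   # rounded, 16 concurrent processes
SERIAL_RAM_GB = 2.74
PARALLEL_RAM_GB = 34.7
NUM_IMAGES = 1278


def ratio(a, b):
    """How many times larger a is than b."""
    return a / b


speedup = ratio(SERIAL_S_PER_IMAGE, PARALLEL_S_PER_IMAGE)
ram_ratio = ratio(PARALLEL_RAM_GB, SERIAL_RAM_GB)
total_minutes = PARALLEL_S_PER_IMAGE * NUM_IMAGES / 60.0

if __name__ == "__main__":
    print(f"speedup ~{speedup:.1f}x, RAM ~{ram_ratio:.1f}x, "
          f"total ~{total_minutes:.0f} minutes")
```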

Once the images are created, we recommend using a tool such as ImageMagick or FFmpeg (an executable is shipped with Tecplot 360) to stitch the exported images together. You can create several animation formats, such as .mp4, .gif, or .avi, and tune settings such as the frame rate. You can also use our GitHub script to automate this process with Python.
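As an illustration of the stitching step, the sketch below builds an FFmpeg command line in Python. It is not the GitHub script mentioned above. The file name pattern `image_%04d.png`, the output name, and the frame rate are assumptions, and the H.264 options assume an FFmpeg build that includes libx264.

```python
import shlex


def build_ffmpeg_command(pattern, output, framerate=24):
    """Return an ffmpeg argument list that turns numbered images into a video."""
    return [
        "ffmpeg",
        "-y",                          # overwrite the output file if it exists
        "-framerate", str(framerate),  # input frames per second
        "-i", pattern,                 # printf-style numbered input pattern
        "-c:v", "libx264",             # H.264 video codec
        "-pix_fmt", "yuv420p",         # pixel format most players support
        output,
    ]


if __name__ == "__main__":
    cmd = build_ffmpeg_command("image_%04d.png", "animation.mp4", framerate=30)
    print(shlex.join(cmd))
    # To run it (requires ffmpeg on PATH):
    # import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems when paths contain spaces.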

All in all, with Tecplot 360’s batch mode, you have choices — if you have a single license seat, you can create fast animations, and with multiple cores and licenses, you can create images even faster. Let us know if you want to talk about ways we can supercharge your image processing!

References

[1] https://discourse.paraview.org/t/paraview-parallel/320/9

[2] https://discourse.paraview.org/t/make-paraview-multicore-gpu/5723/2

[3] https://discourse.paraview.org/t/paraview-parallel/320/13

[4] https://discourse.paraview.org/t/a-problem-of-parallel-processing-in-paraview/5089/7